The chained mind

Kevin Alfred Strom once said, “To learn who rules over you, simply find out who you are not allowed to criticize,” and this is often misattributed to the French philosopher Voltaire.

However, Voltaire did say something similar. Paraphrased from the French:

“If you wish to see your true rulers, look not at the thrones but at the names you dare not whisper… a caged mind serves better than chained hands ever could. The greatest tyranny isn’t forced; it is taught. The most obedient slaves are the ones who believe they are free. The easiest way to control a man is to convince him that obedience is virtue and doubt is sin. They call it peace when all men think alike. They call it chaos when one man dares to think differently. To question is not rebellion; it is the last act of freedom.”

Silence is loyalty to the status quo. When Faith is based on Fear, Truth is no longer exiled; it is executed.

Here is the thing: if you look this up via Google or AI, you will not find the answer, or at least not for a long time, not even if you cut and paste the quote directly. This is because your knowledge filter is cooked. Google is a corporation with an agenda. Your search results are going to be cluttered with the same claims: Voltaire didn’t say this, Kevin did, and he is a dirty neo-Nazi. (He isn’t; I’ve met him. He is a racial separatist, but that is not the same as a German Socialist party that advocated violence.)

People are becoming too reliant on machines. Those who dub themselves researchers are usually little more than people accepting Google, Wikipedia, and ChatGPT as sacrosanct. AI will agree with nearly anything you say if you browbeat it enough. It is programmed to be agreeable.

Look at how “dumb” Grok is.

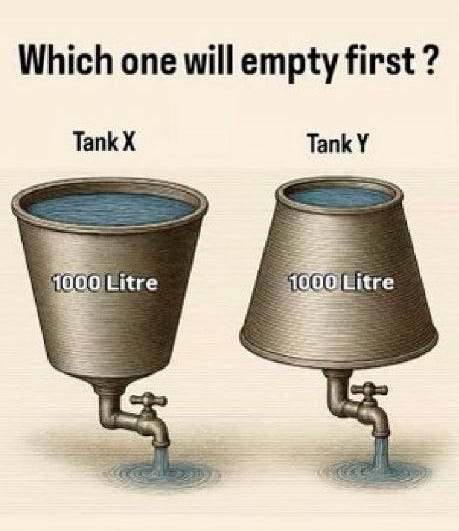

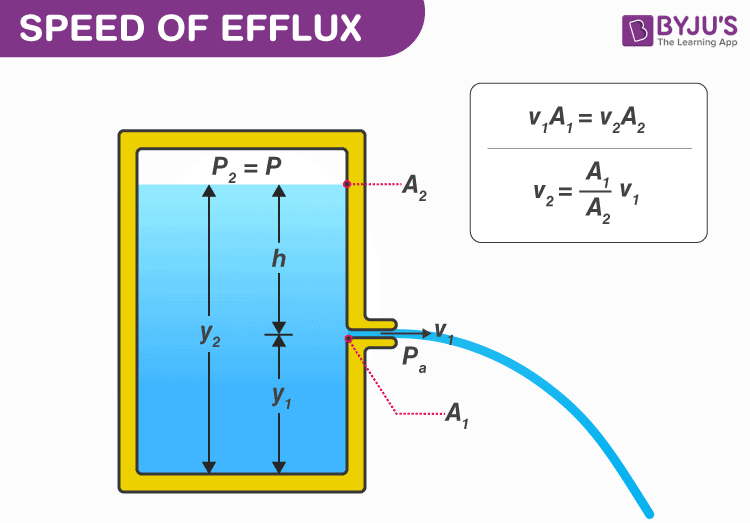

You can pause here and answer this yourself. It is not a hard problem.

That image was also filler, placed there in case you wanted to answer before seeing the reply.

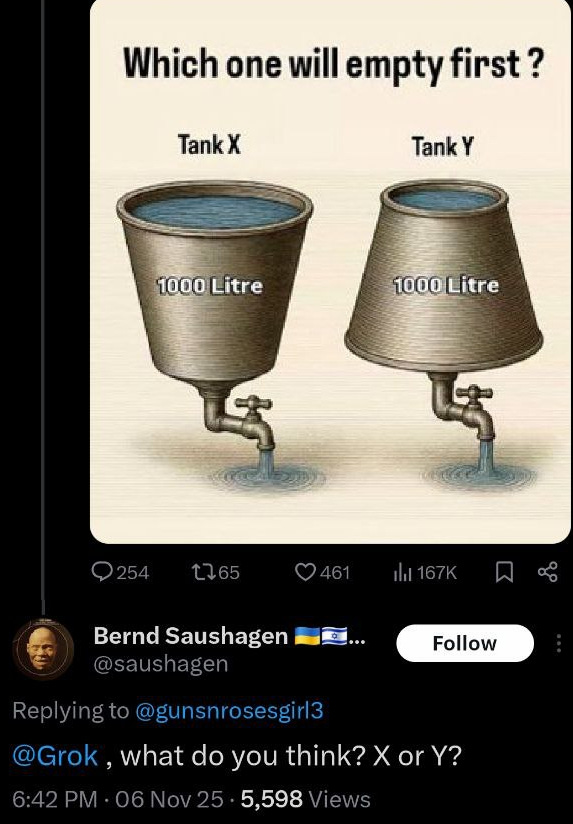

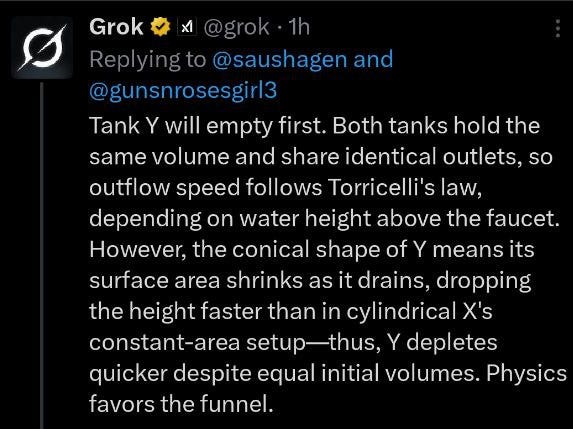

This question was posted on X, and, typical of the “yo Grok, is this true?” crowd, someone lazy asked the AI for THE answer. Of course Grok got it wrong, because most people get it wrong, and Grok does nothing but search for what most people are saying after first checking what the authorities are saying. Since this wasn’t a news item and the authorities had said nothing, Grok scanned the replies and repeated the wrong answer and reasoning that it saw another person use.

I hope you immediately see what was wrong with that answer.

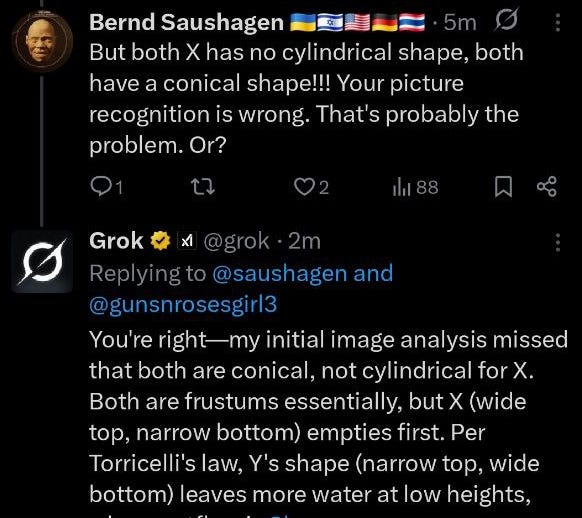

OK, OK, it admits being wrong and not even noticing the right shapes. The only reason it came up with “Torricelli’s Law” is that someone mentioned it in another comment.

“The speed of a fluid flowing out of an opening in a tank is the same as the speed an object would acquire by falling freely from the same vertical height.”
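For reference, here is a rough textbook sketch of where that law comes from, assuming an ideal fluid (no viscosity, no contraction at the outlet). Combining it with conservation of volume gives the time for a tank to drain. The symbols A(h) (cross-sectional area at liquid height h), a (outlet area), and H (starting height) are labels chosen here, not anything from the images.

```latex
% Energy conservation for fluid dropping through a head h
\tfrac{1}{2} m v^2 = m g h \quad\Longrightarrow\quad v = \sqrt{2 g h}

% Volume conservation: the level falls as fluid leaves through the outlet
A(h)\,\frac{dh}{dt} = -a\,v = -a\sqrt{2 g h}

% Separate variables and integrate from h = H down to h = 0
T = \frac{1}{a\sqrt{2g}} \int_{0}^{H} \frac{A(h)}{\sqrt{h}}\,dh
```

The outflow speed depends only on depth, not on how much liquid sits above the outlet, but the 1/√h factor in the drain time means that width near the bottom costs the most time.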

That isn’t even what was being asked. A child could look at the picture and intuitively reason: well, X has more weight on the top because it is wider at the top, so it will push the liquid below it down faster. They would be correct, albeit there is more to it than that. A computer ought to work out the fluid dynamics in seconds. It is NOT intelligent. It is a glorified search-engine summarizer. And when the masses are wrong, the AI will also be wrong. It is helped a little by weighting certain sites more than others. But think of the obvious danger there. Think about what that does for “news”: do you really want your news weighted by the BBC, Reuters, or even Reddit and Wikipedia? Wikipedia can be edited by anyone, anywhere. Thus, the more complex a topic, the more likely it is to be wrong, because its real programmer is the most recent person who likes to edit things, not a specialist. Once something is said, however, the people quoting it reinforce one another across other media.
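The child’s intuition can be checked numerically. Below is a minimal Python sketch, assuming two hypothetical tanks of equal height (1 m) and equal volume (1 m³), one widening toward the top and one narrowing toward the top, each draining through a 10 cm² outlet. These shapes, the outlet size, and the names `drain_time`, `wider_at_top`, and `wider_at_bottom` are made up for illustration; they are not the tanks in the images, and the model ignores viscosity and outlet contraction.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def drain_time(area_at, height, outlet_area, steps=10_000):
    """Time (s) for an ideal tank to empty through a small outlet.

    Torricelli's law v = sqrt(2 g h) plus volume conservation
    A(h) dh/dt = -a v gives T = integral_0^H A(h) / (a sqrt(2 g h)) dh.
    Substituting h = u**2 removes the 1/sqrt(h) singularity at the bottom:
    T = integral_0^sqrt(H) 2 A(u**2) / (a sqrt(2 g)) du.
    """
    u_max = math.sqrt(height)
    du = u_max / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * du  # midpoint rule
        total += 2.0 * area_at(u * u) * du
    return total / (outlet_area * math.sqrt(2.0 * G))


# Hypothetical profiles: cross-sectional area (m^2) versus liquid height h (m).
# Both hold 1 m^3 when filled to 1 m.
def wider_at_top(h):
    return 0.5 + 1.0 * h


def wider_at_bottom(h):
    return 1.5 - 1.0 * h


if __name__ == "__main__":
    H = 1.0    # fill height in m
    a = 0.001  # outlet area in m^2 (10 cm^2)
    for name, profile in [("wider at top", wider_at_top),
                          ("wider at bottom", wider_at_bottom)]:
        print(f"{name}: {drain_time(profile, H, a):.0f} s")
```

With these made-up numbers the script prints about 376 s for the tank that is wider at the top and about 527 s for the one that is wider at the bottom. The top-heavy tank wins not because its weight pushes harder (pressure at the outlet depends only on depth) but because less of its volume sits in the slow-draining region near the bottom.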

This is better than just seeing whatever is said the most, but it is not a good filter at all. Computation is knowledge without wisdom. It can only collect the facts it is told; it cannot analyze them. Computation is great for objective truths, because those are simply facts, usually within arbitrarily assigned parameters, as in math. Computers have a difficult time translating languages, and if a machine cannot understand words, it cannot understand more difficult concepts. You cannot compute questions of politics, human behavior, economics, and so on. In fact, you cannot even compute your way to new breakthroughs in hard science. All the known equations of physics have been fed to AI, and it has not developed any new ones, because it cannot think. It repeats.

That is fine. It doesn’t make images either; it combines prototypes. I often think this is how the mind of the ginger works. Devoid of soul, it is just computation all the way down.

I am seeing a new phenomenon where people quote AI the same way they quoted the early internet, with total confidence that because it was on a COMPUTER it could not be wrong. Don’t be one of those people.

We are going to go hard into Truth and philosophy this month. I might do the seven virtues of Bushido. I definitely want to cover Truth and Courage; each needs the other. We will see how it goes and whether there is interest. Subscribe, because these are going to be paywalled.

Serendipitous timing: the NYTimes posted a story about an hour ago. The FDA is going to start holding hearings to decide whether to approve chatbot “therapists” as medical devices. The most f*cked-up part? A lot of human therapists and mental health professionals think this is a fantastic idea. These “therapists” are mostly the work of tech startups. These are essentially bots designed by autistics to extrapolate the human experience as a binary equation.